The Cooling Equation: Why AI Demands Liquid

Liquid cooling isn’t a luxury; it’s the physics of the future. Those who master it will define the pace, scale, and sustainability of the AI revolution.

When Air Reaches Its Limit, Liquid Cooling Takes the Lead

Established shortly after ChatGPT’s launch, with the support of Wistron, Foxconn, and Pegatron, Zettabyte emerged to combine the world’s leading GPU and data center supply chain with a sovereign-grade, neutral software stack.

As artificial intelligence scales at unprecedented speed, the world’s data centers are being pushed beyond their physical and thermal limits. The rise of GPU-dense clusters (the engines behind generative AI, large language models, and advanced analytics) has introduced a new constraint no algorithm can optimize away: heat.

Traditional air-cooled data centers were never built for this kind of power density. Legacy racks once managed 20–30 kW of thermal load; modern AI workloads routinely demand 100–150 kW per rack or more. Fans, raised floors, and chilled air simply can’t keep pace. The result is inefficiency, wasted energy, and throttled performance.

This is where liquid cooling becomes not just a technical upgrade, but a strategic imperative.

1. From Air to Liquid: A Physics-Driven Shift

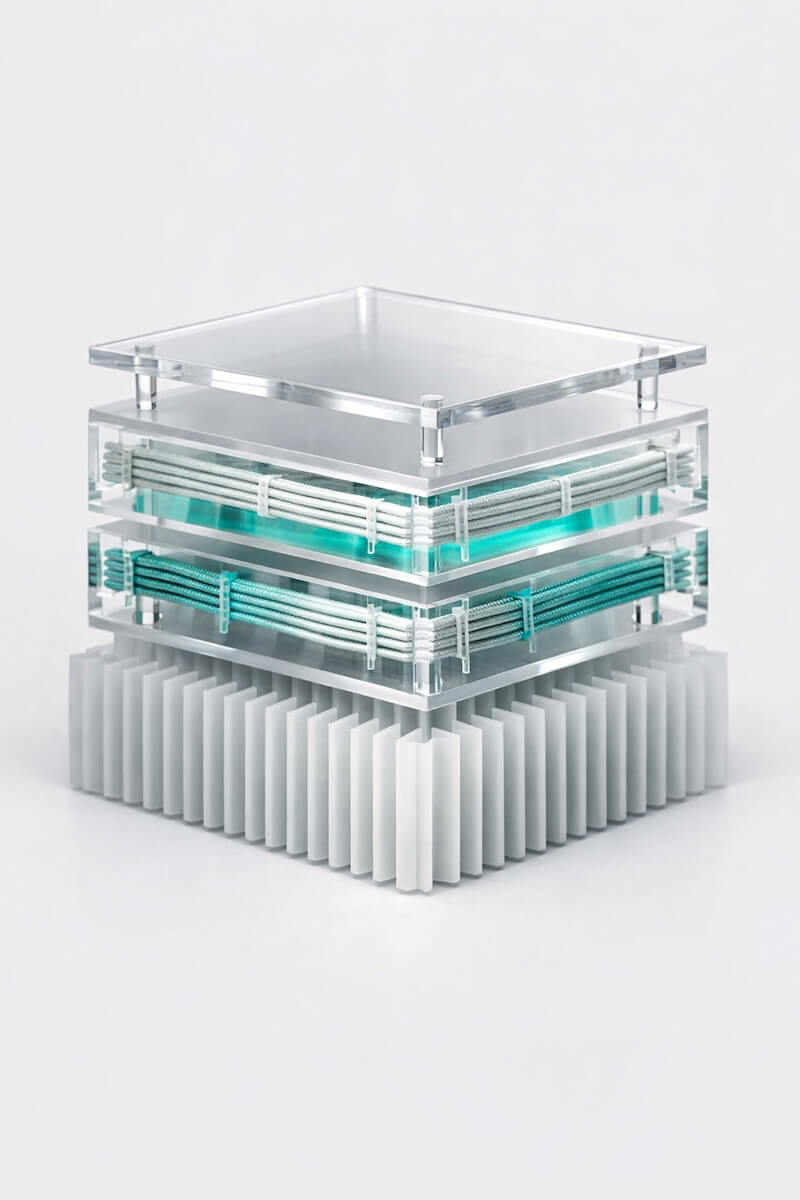

Air cooling moves heat through volume; liquid cooling moves heat through contact. Per unit volume, water can absorb on the order of 3,000 times more heat than air, and engineered coolants offer a similar though smaller advantage, so GPUs and CPUs can operate at peak efficiency without thermal throttling.
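To make the physics concrete, here is a minimal Python sketch of the steady-state heat balance: heat removed = density × specific heat × volumetric flow × temperature rise. The fluid properties are approximate textbook values near room temperature, and the 100 kW rack load and the temperature rises are illustrative assumptions, not measurements from any particular facility.

```python
# Approximate textbook properties near room temperature (assumed values).
WATER = {"density": 997.0, "cp": 4186.0}   # kg/m^3, J/(kg*K)
AIR = {"density": 1.2, "cp": 1005.0}       # kg/m^3, J/(kg*K)


def volumetric_heat_capacity(fluid):
    """Heat absorbed per cubic meter per kelvin, in J/(m^3*K)."""
    return fluid["density"] * fluid["cp"]


def required_flow_m3_per_s(heat_w, fluid, delta_t_k):
    """Volumetric flow needed to carry heat_w watts at a delta_t_k rise."""
    return heat_w / (volumetric_heat_capacity(fluid) * delta_t_k)


RACK_HEAT_W = 100_000.0  # hypothetical 100 kW rack

ratio = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)
# Temperature rises below are illustrative assumptions.
air_flow = required_flow_m3_per_s(RACK_HEAT_W, AIR, delta_t_k=15.0)
water_flow = required_flow_m3_per_s(RACK_HEAT_W, WATER, delta_t_k=10.0)

print(f"Water vs. air, heat per unit volume: ~{ratio:,.0f}x")
print(f"Air flow needed:   {air_flow:.2f} m^3/s")
print(f"Water flow needed: {water_flow * 1000:.2f} L/s")
```

Under these assumptions, the air path has to move several cubic meters per second through the rack, while the water loop needs only a few liters per second. That gap is what the "thousands of times" comparison summarizes.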

By bringing the coolant directly to the heat source, through cold plates, immersion baths, or direct liquid loops, data centers can reduce energy used for cooling by 30–50% while achieving higher rack density in the same footprint. That’s not just efficiency; it’s infrastructure liberation.

2. Unlocking Density, Performance, and Longevity

Every watt saved in cooling is a watt that can be redirected to computation.

Liquid cooling allows operators to stack more GPUs per rack, reduce spacing requirements, and sustain consistent performance under continuous load.

Components run cooler and last longer, while Power Usage Effectiveness (PUE) improves dramatically.
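PUE is the ratio of total facility power to the power delivered to IT equipment, so a lower cooling load pulls it toward the ideal of 1.0. The sketch below shows that arithmetic for a hypothetical facility. The load breakdown is an illustrative assumption, and the 40% cooling reduction is simply the midpoint of the 30–50% range quoted earlier.

```python
def pue(it_kw, cooling_kw, other_overhead_kw):
    """Power Usage Effectiveness: total facility power / IT power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


# Hypothetical facility: all figures are illustrative assumptions.
IT_KW = 1000.0
COOLING_KW = 400.0
OTHER_KW = 100.0          # power distribution losses, lighting, etc.
COOLING_REDUCTION = 0.40  # midpoint of the 30-50% range

baseline = pue(IT_KW, COOLING_KW, OTHER_KW)
improved = pue(IT_KW, COOLING_KW * (1 - COOLING_REDUCTION), OTHER_KW)
freed_kw = COOLING_KW * COOLING_REDUCTION

print(f"Baseline PUE: {baseline:.2f}")
print(f"Improved PUE: {improved:.2f}")
print(f"Power freed for compute: {freed_kw:.0f} kW")
```

With these example inputs the PUE moves from 1.50 to 1.34. The same function can be rerun with a site's own metered loads to see how sensitive the result is to the cooling share.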

In the era of AI superclusters, density equals advantage. The ability to pack more compute into less space, without overheating, defines who leads in scalability and cost per inference.

3. Sustainability and the New Energy Equation

AI is power hungry, but sustainability can’t be optional. Liquid cooling directly supports green data center initiatives by reducing overall energy consumption and enabling waste heat recovery, where excess thermal energy is reused to heat nearby buildings or industrial systems.

In regions where energy prices and ESG standards are rising, this isn’t just good practice; it’s economic survival. Efficient thermal design is becoming as critical to compliance as it is to compute.

4. Reliability at Scale: Air Falters Where Liquid Stabilizes

As GPUs draw more power, thermal stability becomes a mission-critical factor.

Air cooling struggles with temperature uniformity, creating hotspots that degrade performance and shorten component lifespan. Even slight fluctuations can trigger throttling, cutting efficiency by double-digit percentages.

Liquid cooling eliminates this variability by maintaining direct, consistent contact between coolant and heat source. Systems stay within their optimal thermal envelope, workloads remain stable, and uptime improves dramatically.

In short: air reacts to heat; liquid controls it.

5. Operational Efficiency and Space Optimization

Air-cooled systems demand vast airflow, meaning more fans, ducts, and physical spacing to prevent recirculation. The result: lower rack density and higher operational overhead. Every cubic meter of air moving through a facility consumes energy and space that could otherwise be dedicated to compute.

Liquid-cooled designs reverse that equation. They enable denser layouts, quieter operations, and smaller mechanical footprints. Cooling infrastructure shrinks, freeing capacity for what matters most: GPUs, not fans.

The outcome is greater compute-per-square-meter and a clear path to economic and spatial efficiency.
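A rough way to see the compute-per-square-meter effect is to count the racks needed for a fixed IT load at different rack densities. The sketch below does that under stated assumptions: the 3 MW cluster size and the per-rack floor area are hypothetical, and the rack densities are picked from the ranges quoted earlier. It ignores the space taken by coolant distribution units and piping, so treat the result as an upper bound on the gain.

```python
import math


def racks_needed(total_it_kw, kw_per_rack):
    """Number of whole racks required to host a given IT load."""
    return math.ceil(total_it_kw / kw_per_rack)


# Hypothetical inputs for illustration only.
TOTAL_IT_KW = 3000.0        # target cluster load
AIR_KW_PER_RACK = 30.0      # upper end of the legacy range cited above
LIQUID_KW_PER_RACK = 120.0  # within the 100-150 kW range cited above
AREA_PER_RACK_M2 = 2.5      # assumed footprint including aisle share

for label, density in [("air", AIR_KW_PER_RACK), ("liquid", LIQUID_KW_PER_RACK)]:
    racks = racks_needed(TOTAL_IT_KW, density)
    area = racks * AREA_PER_RACK_M2
    print(f"{label:>6}: {racks} racks, {area:.1f} m^2, "
          f"{TOTAL_IT_KW / area:.1f} kW/m^2")
```

With these inputs, the same load fits in a quarter of the racks at the higher density. The exact ratio depends entirely on the densities and footprint assumed.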

That’s a Cool Wrap

Air cooling was built for a different era, one of servers, not supercomputers.

Liquid cooling doesn’t just solve the heat problem; it redefines the economics, reliability, and sustainability of compute itself.