zFABRIC: Unified Networking for AI at Every Scale

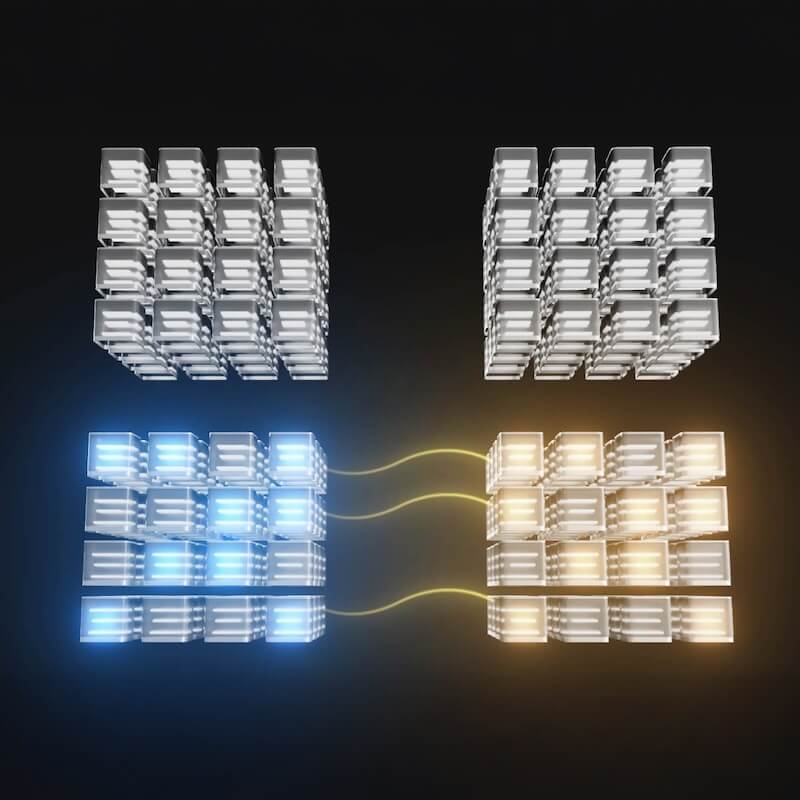

Traditional networking stacks such as InfiniBand and RoCE address parts of the AI scaling challenge, but none delivers a truly end-to-end, workload-aware, topology-aware, multi-data center fabric.

zFABRIC, part of the zSUITE Platform, is designed as the AI-native networking fabric that unifies all three scaling dimensions into a single, software-defined control plane.

Established shortly after ChatGPT’s launch, with the support of Wistron, Foxconn, and Pegatron, Zettabyte emerged to combine the world’s leading GPU and data center supply chain with a sovereign-grade, neutral software stack.

As AI systems grow to trillion-parameter scale, GPU clusters must support three simultaneous capabilities:

Existing networking technologies, including InfiniBand and RoCE, solve fragments of today’s scaling challenges but stop short of full unification. zFABRIC, within the zSUITE Platform, delivers an AI-native fabric that brings together all three scaling dimensions under a single, software-defined control plane.

zFABRIC unifies:

zFABRIC transforms GPU networks from static transport layers into an intelligent, dynamic resource optimized explicitly for AI training and inference workloads.

1. AI Scaling: The Three Dimensions Reframed by zFABRIC

Modern AI infrastructure expands along three axes:

| Scaling Dimension | Description | Challenges | zFABRIC’s Role |
|---|---|---|---|
| Scale-Up | GPU-to-GPU interconnect inside a server or cabinet | Topology complexity, asymmetric bandwidth | Auto-discovery, affinity optimization |
| Scale-Out | Cluster-level expansion within a data center | Tail latency, congestion, multi-tenant fairness | Deterministic Ethernet + AI congestion control |
| Scale-Across | Connecting multiple data centers | WAN latency, routing, bandwidth | Multi-DC control plane + job-aware routing |

zFABRIC binds these three layers into a unified operational fabric.

2. zFABRIC for Scale-Up: Making GPU Topology First-Class

Scale-up networks (NVLink / NVSwitch / UALink / PCIe) are essential for:

2.1 Industry Background

Scale-Up fabrics feature:

However, training frameworks often treat GPU topologies as opaque, causing:

2.2 zFABRIC’s Role in Scale-Up

(A) zFABRIC Topology Service

Automatically learns:

(B) zFABRIC Intranode Scheduler

Uses real-time telemetry to determine:

(C) ZCCL Topology-Aware Collectives

zFABRIC’s collective library builds optimized rings and trees based on:

Scale-Up becomes visible and programmable, not a hidden hardware detail.
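
To make "topology-aware" ring construction concrete, here is a minimal sketch. It is not ZCCL's actual algorithm, which the post does not describe. It assumes a hypothetical table of pairwise GPU link bandwidths (`BW`, with illustrative numbers) and uses a simple greedy heuristic: start at GPU 0 and always hop to the unvisited GPU with the fastest link. The helper names `link_bw`, `greedy_ring`, and `ring_bottleneck` are invented for this example.

```python
from typing import Dict, List, Tuple

# Hypothetical undirected link bandwidths in GB/s, keyed by (low_gpu, high_gpu).
# The numbers are illustrative only, not measurements of any real system.
Bandwidth = Dict[Tuple[int, int], float]

BW: Bandwidth = {
    (0, 1): 50.0, (0, 2): 300.0, (0, 3): 300.0,
    (1, 2): 300.0, (1, 3): 300.0, (2, 3): 50.0,
}


def link_bw(bw: Bandwidth, a: int, b: int) -> float:
    """Bandwidth of the link between GPUs a and b, or 0.0 if none is listed."""
    return bw.get((min(a, b), max(a, b)), 0.0)


def greedy_ring(num_gpus: int, bw: Bandwidth) -> List[int]:
    """Build a ring by always moving to the fastest-linked unvisited GPU.

    Ties are broken toward the lower GPU index. This is a heuristic and is
    not guaranteed to find the best possible ring.
    """
    ring = [0]
    unvisited = set(range(1, num_gpus))
    while unvisited:
        last = ring[-1]
        nxt = max(unvisited, key=lambda g: (link_bw(bw, last, g), -g))
        ring.append(nxt)
        unvisited.remove(nxt)
    return ring


def ring_bottleneck(ring: List[int], bw: Bandwidth) -> float:
    """Slowest link in the ring, including the hop from the last GPU back to the first."""
    n = len(ring)
    return min(link_bw(bw, ring[i], ring[(i + 1) % n]) for i in range(n))
```

With the sample table, the index-order ring `[0, 1, 2, 3]` is limited by a 50 GB/s link, while `greedy_ring(4, BW)` returns `[0, 2, 1, 3]`, whose slowest link is 300 GB/s. A ring collective runs at the pace of its slowest hop, which is why exposing topology to the collective library matters.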

3. zFABRIC for Scale-Out: Deterministic AI Ethernet Fabric

Scale-Out connects thousands of GPUs across a data hall. Challenges include:

zFABRIC replaces fragile manual tuning with an autonomous AI fabric.

3.1 zFABRIC DCQCN+ - Advanced AI Congestion Control

Enhances RoCEv2 with:

Benefits:

3.2 zFABRIC Fabric Controller

Provides an intent-based network operating plane:

3.3 ZCCL (zFABRIC Collective Communication Library)

Designed for AI training traffic:

ZCCL outperforms NCCL/RCCL in large-scale clusters by 5–18%.

4. zFABRIC for Scale-Across: Multi–Data Center AI Fabric

AI factories increasingly span multiple data centers due to:

Standard networking lacks:

zFABRIC introduces a global fabric controller that extends training workflows across multiple regions.

4.1 zFABRIC DCI Fabric (ZDF)

zFABRIC’s scale-across layer includes:

4.2 Global Fabric Controller (GFC)

Handles:

This allows AI operators to treat multiple data centers as one logical GPU pool.
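
As a rough illustration of what treating several sites as one logical GPU pool could involve, the sketch below places a job across data centers. It is an assumption-laden toy, not the Global Fabric Controller's real logic: the `DataCenter` type, the `place_job` function, the site names, and the round-trip times are all hypothetical. The policy shown is to keep a job inside one site when it fits, and otherwise to split it across the pair of sites with the lowest round-trip time.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Dict, FrozenSet, List, Optional


@dataclass(frozen=True)
class DataCenter:
    name: str
    free_gpus: int


def place_job(
    gpus_needed: int,
    dcs: List[DataCenter],
    rtt_ms: Dict[FrozenSet[str], float],
) -> Optional[Dict[str, int]]:
    """Return {site: gpu_count} for the job, or None if it cannot be placed.

    Hypothetical policy: best-fit within a single site first; otherwise the
    lowest-RTT pair of sites whose combined free GPUs cover the request.
    """
    fits = [dc for dc in dcs if dc.free_gpus >= gpus_needed]
    if fits:
        best = min(fits, key=lambda dc: (dc.free_gpus, dc.name))
        return {best.name: gpus_needed}

    pairs = [
        (a, b)
        for a, b in combinations(dcs, 2)
        if a.free_gpus + b.free_gpus >= gpus_needed
        and frozenset((a.name, b.name)) in rtt_ms
    ]
    if not pairs:
        return None
    a, b = min(pairs, key=lambda p: rtt_ms[frozenset((p[0].name, p[1].name))])
    # Fill the larger site completely, then spill the remainder to the other one.
    big, small = (a, b) if a.free_gpus >= b.free_gpus else (b, a)
    take_big = min(big.free_gpus, gpus_needed)
    return {big.name: take_big, small.name: gpus_needed - take_big}


# Illustrative inventory and inter-site round-trip times (made-up values).
SITES = [DataCenter("dc-a", 512), DataCenter("dc-b", 256), DataCenter("dc-c", 384)]
RTT_MS = {
    frozenset(("dc-a", "dc-b")): 35.0,
    frozenset(("dc-a", "dc-c")): 45.0,
    frozenset(("dc-b", "dc-c")): 70.0,
}
```

Here `place_job(256, SITES, RTT_MS)` stays inside `dc-b`, while `place_job(700, SITES, RTT_MS)` spans `dc-a` and `dc-b` because that pair has the lowest round-trip time among pairs with enough capacity. A production controller would also weigh bandwidth, tenancy, and data locality, which this sketch ignores.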

5. Optional Layer: zFABRIC + OCS for AI Optical Super-Fabrics

Although zFABRIC does not require OCS, it integrates OCS as a performance accelerator for clusters with large, predictable flows. AI workloads produce:

These are ideal for circuit-switched optics.

5.1 Why zFABRIC Integrates OCS

OCS provides:

zFABRIC’s OCS Integration Layer can:

This transforms the optical network into an active component of the AI training engine.
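
One way to picture how large, predictable flows map onto circuit-switched optics is the toy selection routine below. It is only a sketch under stated assumptions, not zFABRIC's OCS Integration Layer: it assumes each rack has a single optical port, that per-step traffic between rack pairs is known in advance, and that a fixed threshold (`min_gb`) separates flows worth a circuit from the rest. The function name `pick_circuits` and the demand figures are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# (src_rack, dst_rack) -> predicted traffic per training step, in GB.
# Values are invented for illustration.
Demand = Dict[Tuple[str, str], float]

DEMAND: Demand = {
    ("r1", "r2"): 80.0,
    ("r1", "r3"): 60.0,
    ("r3", "r4"): 50.0,
    ("r2", "r4"): 5.0,
}


def pick_circuits(
    demand: Demand, min_gb: float
) -> Tuple[List[Tuple[str, str]], List[Tuple[str, str]]]:
    """Greedily give optical circuits to the heaviest flows.

    Assumes one optical port per rack, so a rack can terminate at most one
    circuit. Flows that are too small, or whose endpoints are already in
    use, stay on the packet-switched fabric.
    Returns (circuit_flows, packet_flows).
    """
    busy: Set[str] = set()
    circuits: List[Tuple[str, str]] = []
    packet: List[Tuple[str, str]] = []
    # Heaviest flows first; ties broken by rack names for determinism.
    for (src, dst), gb in sorted(demand.items(), key=lambda kv: (-kv[1], kv[0])):
        if gb >= min_gb and src not in busy and dst not in busy:
            circuits.append((src, dst))
            busy.update((src, dst))
        else:
            packet.append((src, dst))
    return circuits, packet
```

For the sample demand with `min_gb=10.0`, the flows `r1`-`r2` and `r3`-`r4` receive circuits, and `r1`-`r3` (port conflict) and `r2`-`r4` (too small) remain on the packet fabric. Greedy selection is simple but not optimal; a maximum-weight matching would be the natural next refinement.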

6. zFABRIC vs. Traditional Fabrics

| Capability | InfiniBand | Standard RoCE | zFABRIC |
|---|---|---|---|
| Latency Determinism | Medium | Low | High (DCQCN+) |
| Collective Optimization | Limited | Limited | Topology-aware (ZCCL) |
| Multi-Tenant Fairness | Weak | Weak | Strong isolation |
| Multi-DC Awareness | Minimal | None | Native Scale-Across |
| OCS Integration | No | No | Optional, built-in |
| Operational Complexity | High | Very High | Minimal, intent-based |

7. The Road Ahead: zFABRIC for Global AI Factories

AI factories will soon consist of:

zFABRIC is built for this scale:

zFABRIC becomes the “AI-era network OS” that coordinates compute across nodes, clusters, and regions.

8. Conclusion

zFABRIC delivers the first unified AI networking fabric spanning:

With a topology-aware collective engine, autonomous congestion control, and a global networking control plane, zFABRIC allows AI operators to:

zFABRIC transforms AI networking from a passive infrastructure component into a software-defined, intelligent, and scalable performance engine.