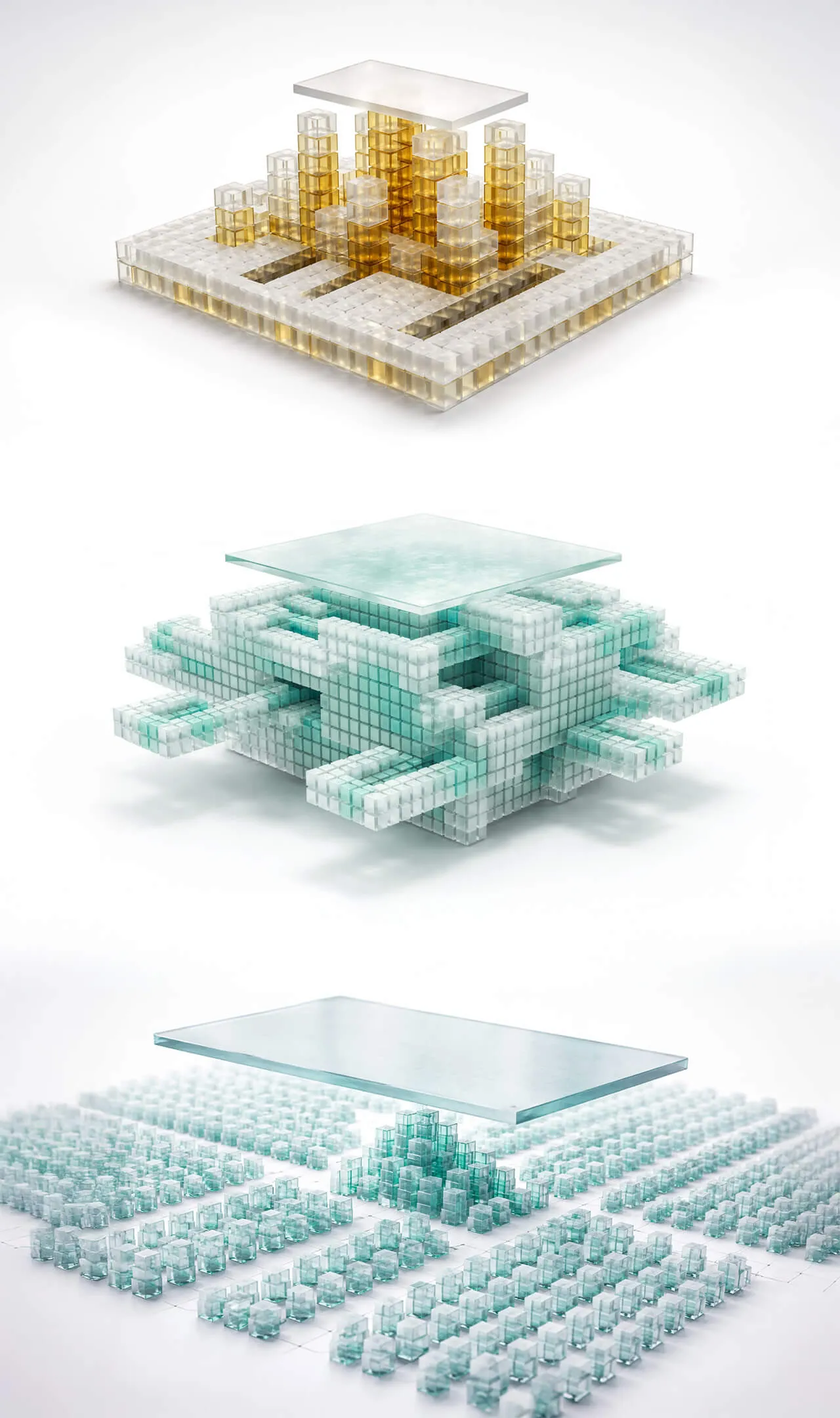

zWARE™ maximizes GPU performance and streamlines operations across your AI infrastructure.

It ensures every GPU delivers peak output with minimal idle time.

zWARE™ isn’t just an AI scheduler; it’s a complete command center for GPU-centric infrastructure. It goes beyond job scheduling to orchestrate compute, networking, and system health in real time across your entire cluster.

zWARE™ delivers these core capabilities to keep your AI workloads running at peak performance:

Have more questions?

Here are quick answers to common questions about zWARE™.

zWARE works like the advanced electronics of a high-performance race car, delivering essential real-time data so the driver can make the best decisions. Without this visibility, even the most skilled driver cannot perform at their best. In the same way, AI data center (AIDC) operators running multi-million-dollar GPU clusters need zWARE to see, respond to, and optimize their infrastructure in real time.

zWARE is designed for anyone who operates GPU-based AI infrastructure. This includes sovereigns, enterprises, data center operators, telcos, research institutions, and AI-focused companies running training, fine-tuning, or large-scale inference workloads. It is suited to on-premises and multisite environments where control, efficiency, and operational reliability are required.

zWARE™ can be adopted in three flexible ways: deploy it on existing GPU clusters (bare-metal or VMs), bundle it with new GPU hardware from our OEM partners, or use it as a managed service through Zettabyte’s Network Operations Center. In all cases, you keep full control over your hardware and data.

zWARE is not merely an AI scheduler; it is a comprehensive, converged control plane for GPU-centric AI infrastructure. It extends well beyond job scheduling to operate as a full AI Digital Command Center, integrating orchestration, observability, and operational control across the entire AI stack. zWARE incorporates ultra-fine-grained telemetry that spans compute and adjacent systems, including networking, power, cooling, and environmental signals. zWARE also supports multi-cluster federation across heterogeneous GPU domains, performs intelligent workload-to-hardware matching based on real-time system state, and delivers continuous operator alerting and feedback loops. zWARE is designed for sovereign-grade deployments, where data isolation, auditability, and operational reliability are mandatory, not optional.

zWARE integrates directly with existing hardware and networking environments without changing GPU ownership or data control. GPU owners can adopt zWARE in three ways: by deploying it on existing bare-metal or virtualized GPU clusters, by bundling it with new GPU infrastructure through Zettabyte’s OEM partners, or by operating it as a managed service supported by Zettabyte’s AI Network Operations Center. This flexibility allows organizations to bring systems online quickly while maintaining control and operational continuity.

zWARE includes Zettabyte’s own optimized Kubernetes stack, which incorporates: (1) a Kubernetes distribution hardened and tuned for GPU-intensive AI workloads; (2) a custom, AI-aware scheduler and orchestrator that operates beyond native Kubernetes abstractions; and (3) GPU-level resource awareness with fine-grained control over allocation, performance states, and operational constraints. Together, these capabilities allow zWARE to run large-scale, high-density GPU clusters. They enable deterministic workload placement, sustained performance, and reliable operations in environments where standard container orchestration alone is insufficient.

zWARE increases effective GPU utilization by ensuring that available compute is used consistently and predictably. By reducing idle capacity, avoiding fragmentation, and responding quickly to operational issues, zWARE enables more tokens to be produced from the same infrastructure. Customers typically achieve 30–40% higher effective GPU utilization, allowing them to deliver results faster while lowering the cost per training run or inference job.
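As a rough illustration of how a utilization gain translates into cost per job, the sketch below assumes a fixed hourly cluster cost. It also assumes that job throughput scales linearly with effective GPU utilization. Both are simplifying assumptions made for illustration, not zWARE measurements, and `relative_cost_per_job` is a hypothetical helper.

```python
# Hypothetical illustration only. Assumes:
#   - the cluster costs the same per hour regardless of utilization
#   - jobs completed per hour scale linearly with effective GPU utilization
# Under these assumptions, cost per job = fixed cost / throughput.

def relative_cost_per_job(utilization_gain: float) -> float:
    """Return cost per job after a utilization gain, relative to a baseline of 1.0."""
    return 1.0 / (1.0 + utilization_gain)


if __name__ == "__main__":
    for gain in (0.30, 0.40):
        saving = 1.0 - relative_cost_per_job(gain)
        print(f"{gain:.0%} higher utilization -> about {saving:.0%} lower cost per job")
```

Under these assumptions, 30% higher utilization corresponds to roughly 23% lower cost per job. A 40% gain corresponds to roughly 29% lower cost per job. Actual savings depend on workload mix, pricing, and how much of the cluster cost is truly fixed.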

zWARE is designed for organizations that need to operate AI infrastructure on their own terms. Unlike hyperscaler platforms, it preserves GPU and data ownership while providing full visibility and control across the infrastructure. Compared to open-source stacks, zWARE is production-ready by design, delivering consistent performance, integrated operations, and measurable improvements in GPU utilization and throughput. This allows teams to run AI workloads more efficiently, scale with confidence, and reduce the long-term cost of operating high-density GPU environments. zWARE also lets on-premises deployments scale by obtaining additional capacity or resources through the zSUITE ecosystem.