Real Economics of AI Infrastructure

AI workloads now push power, cooling, and utilization to their physical limits. Inefficiency has become the new cost curve. Sovereigns and enterprises need systems that make AI scale possible.

The Drivers Behind the Shift

Established shortly after ChatGPT’s launch, with the support of Wistron, Foxconn, and Pegatron, Zettabyte emerged to combine the world’s leading GPU and data center supply chain with a sovereign-grade, neutral software stack.

The world is experiencing an extraordinary surge in demand for AI compute. NVIDIA's Q3 2024 earnings showed data center revenue crossing 22 billion dollars in a single quarter, with CEO Jensen Huang calling this moment the next industrial revolution.

Behind this growth lies an uncomfortable reality that few companies discuss: unless it is built correctly, AI infrastructure becomes dramatically more expensive, more power-hungry, and more operationally complex.

At Zettabyte, we have spent the past few years designing dense GPU clusters, liquid-cooled racks, and software-optimized data centers across Asia.

What we have learned is clear. The economics of AI infrastructure in 2025 will be defined by three forces: cost, efficiency, and sustainability.

1. The New Cost Curve: Hardware Is Expensive, but Inefficiency Is Worse

SemiAnalysis notes that total cost per GPU cluster is rising faster than hardware performance gains. AI infrastructure spending is no longer dominated by GPUs. Power, cooling, networking, and unused GPU capacity are becoming major cost drivers.

Many enterprises still run their clusters at 30 to 40 percent real utilization, which means millions of dollars in unused GPU hours every quarter.

Improving scheduling and orchestration can raise utilization by 20 to 40 percent. In 2025, the best way to reduce cost is to use the GPUs you already own more efficiently.

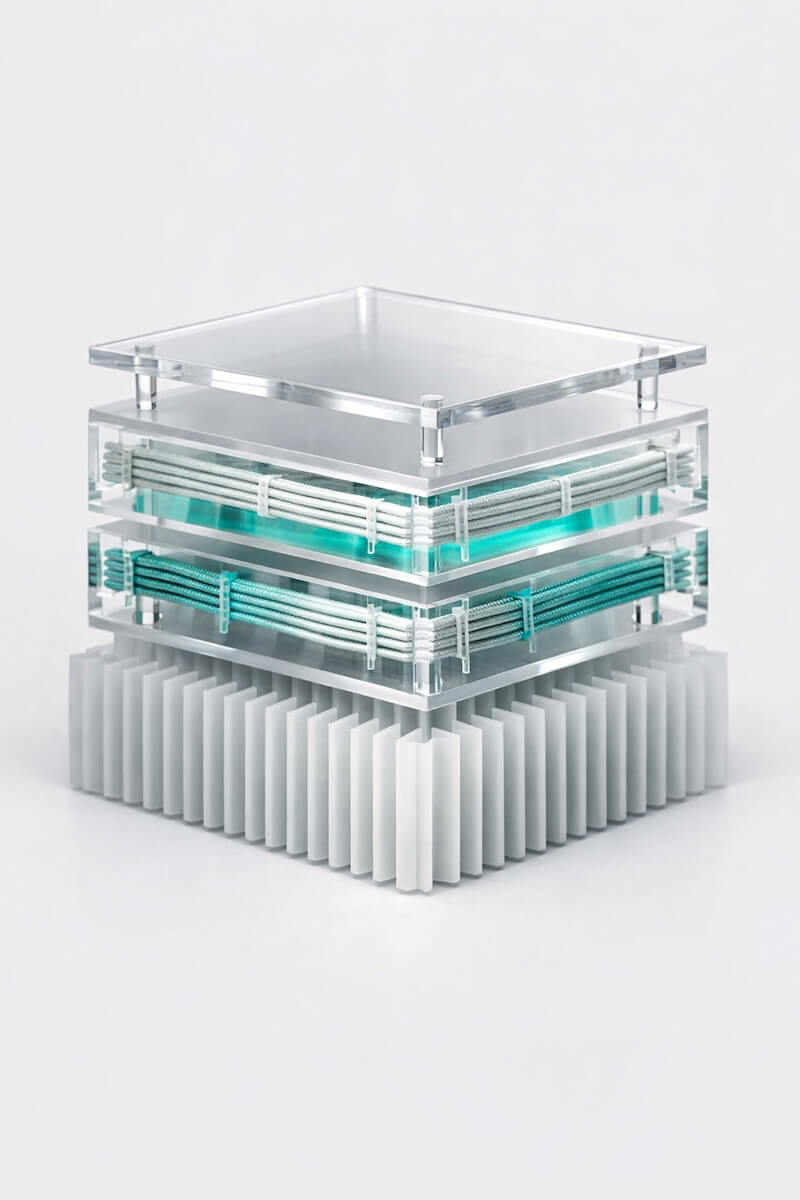
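
To make the utilization argument concrete, here is a minimal sketch of the arithmetic behind "unused GPU hours". The cluster size, hours, and per-GPU-hour cost are hypothetical assumptions chosen for illustration, and the helper names `idle_cost` and `cost_per_useful_gpu_hour` are our own, not part of any Zettabyte product.

```python
# Illustrative sketch: all input figures below are hypothetical assumptions,
# not numbers taken from the article.


def idle_cost(gpus: int, hours: float, cost_per_gpu_hour: float, utilization: float) -> float:
    """Dollars spent on GPU-hours that did no useful work in the period."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return gpus * hours * cost_per_gpu_hour * (1.0 - utilization)


def cost_per_useful_gpu_hour(cost_per_gpu_hour: float, utilization: float) -> float:
    """Effective price of one productive GPU-hour, since idle hours are paid for too."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return cost_per_gpu_hour / utilization


if __name__ == "__main__":
    GPUS = 1024               # assumed cluster size
    HOURS = 24 * 91           # roughly one quarter of wall-clock hours
    COST_PER_GPU_HOUR = 2.50  # assumed all-in USD cost of one GPU-hour
    for util in (0.35, 0.60):
        wasted = idle_cost(GPUS, HOURS, COST_PER_GPU_HOUR, util)
        effective = cost_per_useful_gpu_hour(COST_PER_GPU_HOUR, util)
        print(f"utilization {util:.0%}: idle spend ${wasted:,.0f}/quarter, "
              f"${effective:.2f} per useful GPU-hour")
```

Under these assumptions, moving from 35 to 60 percent utilization (reading the improvement as percentage points, which is only one interpretation) cuts idle spend from about $3.6M to about $2.2M per quarter, and the effective price of a useful GPU-hour falls from about $7.14 to about $4.17.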

2. Power Density Is Becoming the Real Bottleneck

- Legacy air-cooled racks top out around 20 to 30 kW.

- Modern AI racks require 100 to 150 kW or more.

- SemiAnalysis summarizes this trend clearly: legacy data centers are becoming economically obsolete.

- Cooling and power upgrades now represent a large part of AI infrastructure cost.

- This is why Zettabyte invests in liquid cooling and high-density retrofits.
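
A rough way to see why per-rack power, not GPU count, sets the physical footprint is to divide a fixed GPU fleet by what each rack can power and cool. The sketch below assumes a hypothetical all-in draw of about 1.5 kW per GPU (GPU plus its share of CPUs, memory, NICs, and fans); real figures vary by platform, and the model ignores space, weight, and networking limits.

```python
import math

# Hypothetical assumption: ~1.5 kW per GPU all-in. Real figures vary by platform.
KW_PER_GPU = 1.5


def gpus_per_rack(rack_budget_kw: float, kw_per_gpu: float = KW_PER_GPU) -> int:
    """How many GPUs a rack's power and cooling budget can support."""
    return math.floor(rack_budget_kw / kw_per_gpu)


def racks_needed(total_gpus: int, rack_budget_kw: float,
                 kw_per_gpu: float = KW_PER_GPU) -> int:
    """Racks required when power per rack is the binding constraint."""
    per_rack = gpus_per_rack(rack_budget_kw, kw_per_gpu)
    if per_rack == 0:
        raise ValueError("rack budget cannot power even one GPU")
    return math.ceil(total_gpus / per_rack)


if __name__ == "__main__":
    for budget_kw in (25, 120):  # legacy air-cooled rack vs. modern AI rack
        print(f"{budget_kw} kW racks: {racks_needed(1024, budget_kw)} racks for 1,024 GPUs")
```

With these assumptions, 1,024 GPUs need 64 racks at 25 kW but only 13 racks at 120 kW, which is why dense racks reshape floor space, cabling, and cooling design together.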

3. Sustainability Is No Longer a PR Topic; It Is a Profit Topic

- Air-cooled AI clusters use 20 to 40 percent more power than liquid-cooled systems.

- Over a five year period, this results in tens of millions of dollars in additional operating cost.

- Global operators like Google, AWS, and Meta are investing aggressively in cooling efficiency because it directly increases profit.

- Higher utilization, better scheduling, and optimized networking reduce wasted power and improve return on investment.
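
The five-year claim follows from simple energy arithmetic. The sketch below assumes a hypothetical site drawing a constant 20 MW on average when liquid cooled and a flat electricity price of $100 per MWh; both figures are illustrative, not measurements.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 h


def extra_cooling_energy_cost(liquid_avg_mw: float, air_penalty: float,
                              usd_per_mwh: float, years: float) -> float:
    """Extra electricity spend if an air-cooled build draws `air_penalty`
    (e.g. 0.3 = 30 percent) more power than the liquid-cooled equivalent.

    Assumes a constant average draw and a flat electricity price.
    """
    extra_mw = liquid_avg_mw * air_penalty
    return extra_mw * HOURS_PER_YEAR * years * usd_per_mwh


if __name__ == "__main__":
    # Hypothetical site: 20 MW average draw when liquid cooled, $100/MWh, 5 years.
    for penalty in (0.2, 0.3, 0.4):
        cost = extra_cooling_energy_cost(20.0, penalty, 100.0, 5)
        print(f"{penalty:.0%} penalty: ${cost / 1e6:,.1f}M extra over five years")
```

Across the 20 to 40 percent range, this hypothetical site pays roughly $17.5M to $35M more over five years, consistent with the "tens of millions" figure above; actual results scale with site size and local power prices.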

4. The Rise of the Software-Defined GPU Data Center

- Industry voices agree that future AI performance gains will come from systems, networking, and software layers more than from hardware alone.

- Dylan Patel of SemiAnalysis notes that orchestration and system design will drive the next generation of AI improvement.

- Jay Goldberg from Digits to Dollars comments that everyone is buying GPUs but very few know how to operate them well.

- zWARE and zFABRIC were built for this new reality, providing scheduling, orchestration, networking efficiency, and fast cluster recovery.
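
To illustrate why scheduling matters, the sketch below packs single-node GPU jobs onto 8-GPU nodes with first-fit decreasing, a classic bin-packing heuristic. This is a generic textbook technique chosen for illustration, not a description of how zWARE schedules work; the job sizes and node width are hypothetical.

```python
def first_fit_decreasing(job_gpus: list[int], gpus_per_node: int = 8) -> list[list[int]]:
    """Pack single-node jobs onto nodes: largest jobs first, each onto the
    first node with enough free GPUs; open a new node only when none fits."""
    nodes: list[list[int]] = []  # job sizes placed on each node
    free: list[int] = []         # free GPUs left on each node
    for job in sorted(job_gpus, reverse=True):
        if not 0 < job <= gpus_per_node:
            raise ValueError(f"job of {job} GPUs does not fit on one node")
        for i, room in enumerate(free):
            if job <= room:
                nodes[i].append(job)
                free[i] -= job
                break
        else:  # no existing node had room
            nodes.append([job])
            free.append(gpus_per_node - job)
    return nodes


def allocation_rate(nodes: list[list[int]], gpus_per_node: int = 8) -> float:
    """Share of GPUs on the occupied nodes that are allocated to some job."""
    if not nodes:
        return 0.0
    return sum(map(sum, nodes)) / (len(nodes) * gpus_per_node)


if __name__ == "__main__":
    jobs = [4, 2, 2, 1, 1, 4, 2, 8]  # hypothetical GPU requests
    packed = first_fit_decreasing(jobs)
    print(len(packed), "nodes,", f"{allocation_rate(packed):.0%} of their GPUs allocated")
    # Naive placement for comparison: one job per node.
    naive = [[job] for job in jobs]
    print(len(naive), "nodes,", f"{allocation_rate(naive):.0%} of their GPUs allocated")
```

For these jobs, packing fills three nodes completely, while one job per node occupies eight nodes at 37.5 percent allocation. Allocation is only an upper bound on real utilization, because an allocated GPU can still sit idle.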

5. What This Means for Enterprises and Sovereigns in 2025

Before building or renting AI infrastructure, you should ask:

- What is the real utilization rate?

- Can the facility support 100 kW racks?

- Is the software stack optimized end to end?

- Are there measurable efficiency and sustainability metrics?

- Can the system scale without doubling power cost?
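
Several of these questions reduce to a few measurable ratios. The sketch below uses a hypothetical `SiteMetrics` record to compute real utilization (busy GPU-hours over available GPU-hours), Power Usage Effectiveness (PUE, the widely used ratio of total facility energy to IT equipment energy), and a simple 100 kW rack check. The field names and example values are assumptions; how "busy" GPU-hours are measured depends on your telemetry.

```python
from dataclasses import dataclass


@dataclass
class SiteMetrics:
    """Hypothetical per-period measurements for one site."""
    busy_gpu_hours: float       # GPU-hours spent running useful work (from telemetry)
    available_gpu_hours: float  # installed GPUs x wall-clock hours in the period
    facility_energy_kwh: float  # total site energy, including cooling and power losses
    it_energy_kwh: float        # energy drawn by IT equipment only
    max_rack_kw: float          # highest per-rack power the facility can deliver and cool

    def real_utilization(self) -> float:
        return self.busy_gpu_hours / self.available_gpu_hours

    def pue(self) -> float:
        """Power Usage Effectiveness: total facility energy / IT energy (1.0 is ideal)."""
        return self.facility_energy_kwh / self.it_energy_kwh

    def supports_rack_kw(self, target_kw: float = 100.0) -> bool:
        return self.max_rack_kw >= target_kw


if __name__ == "__main__":
    site = SiteMetrics(busy_gpu_hours=700_000, available_gpu_hours=2_000_000,
                       facility_energy_kwh=13_000_000, it_energy_kwh=10_000_000,
                       max_rack_kw=30)
    print(f"real utilization: {site.real_utilization():.0%}")
    print(f"PUE: {site.pue():.2f}")
    print("supports 100 kW racks:", site.supports_rack_kw())
```

This example site would report 35 percent real utilization and a PUE of 1.30, and it would fail the 100 kW rack check: three concrete answers to the questions above.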

Organizations that adopt efficiency-first design will gain a major advantage.

Efficiency Is the New Compute

The era of cheap AI compute is over. The future belongs to efficient, sustainable, software-optimized AI infrastructure. At Zettabyte, we aim to build the world’s most efficient GPU data centers by improving utilization, reducing waste, and enabling enterprises to do more with fewer resources.

In 2025, efficiency will define the winners of the AI race.