AIDC Networking

What began with Scale-Up and Scale-Out architectures has expanded into Scale-Across, where distributed sites operate as one. These three dimensions now define the blueprint for performance, resilience, and efficiency in large-scale AI.

The Essentials, Simplified

In modern AI Data Centers (AIDC), “scaling” is the central theme in the evolution of computing and networking. Traditionally, two expansion models have shaped data center design:

- Scale-Up: vertically increasing the capability of a single node or system

- Scale-Out: horizontally expanding by adding more nodes within a data center

However, with the explosive rise of Generative AI (AIGC) and trillion-parameter models, NVIDIA introduced a third dimension:

- Scale-Across: expanding across geographically distributed data centers to build GW-level AI factories

Together, these three models form the foundation of today’s AI compute and networking architecture.

Outlook: AI Factories of the Coming Decade

Established shortly after ChatGPT’s launch, with the support of Wistron, Foxconn, and Pegatron, Zettabyte emerged to combine the world’s leading GPU and data center supply chain with a sovereign-grade, neutral software stack.


Scale-Up and Scale-Out: The Dual Engines of AI Networking

1. Definitions

Scale-Up (Vertical Expansion)

Scale-Up improves the performance of a single compute node by increasing GPU count, memory bandwidth, or chip-to-chip interconnect performance.

Key Characteristics:

- Tightly coupled hardware

- Extremely high bandwidth

- Ultra-low latency (nanoseconds)

Typical Technologies:

- NVIDIA NVLink / NVSwitch

- UALink (open consortium standard; builds on AMD's Infinity Fabric)

- Broadcom Scale-Up Ethernet (SUE)

Scale-Out (Horizontal Expansion)

Scale-Out increases total compute capacity by adding more servers or racks connected via RDMA-capable networks.

Key Characteristics:

- Cost-effective

- Elastic scalability

- Higher latency (microseconds to milliseconds)

Typical Deployments:

- RoCEv2 Ethernet clusters

- InfiniBand HDR / NDR pods

- Multi-server AI Pods (e.g. DGX SuperPOD)

2. Fundamental Differences in Application Scenarios

| Workload Type | Communication Pattern | Best Scaling Method |
|---|---|---|
| Tensor Parallelism | Heavily memory-dependent, frequent small exchanges | Scale-Up |
| Expert Parallelism (MoE) | High bandwidth + low latency | Scale-Up |
| Data Parallelism | Bulk gradient exchanges | Scale-Out |
| Pipeline Parallelism | Stage-level messaging | Scale-Out |

Scale-Up networks use memory-semantic load–store communication, while Scale-Out relies on message-passing semantics. This fundamental difference makes the two architectures inherently non-interchangeable.
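To make the table's split between tensor parallelism and data parallelism concrete, the sketch below applies the standard alpha-beta model to a ring all-reduce: 2(N-1) steps, each paying a fixed per-step latency plus the time to move a 1/N chunk. The link speeds and per-step latencies are illustrative assumptions, not measured figures, and real collective libraries choose among several algorithms.

```python
def ring_allreduce_seconds(num_gpus: int, message_bytes: float,
                           link_bytes_per_s: float, step_latency_s: float) -> float:
    """Alpha-beta estimate of a ring all-reduce.

    A ring all-reduce is a reduce-scatter followed by an all-gather:
    2 * (N - 1) steps, each moving message_bytes / N over every link.
    """
    steps = 2 * (num_gpus - 1)
    chunk_bytes = message_bytes / num_gpus
    return steps * (step_latency_s + chunk_bytes / link_bytes_per_s)


# Hypothetical 8-way tensor-parallel all-reduce of a 16 MiB activation.
MESSAGE = 16 * 2**20

# Assumed link parameters (illustrative, not measured):
#   Scale-Up: NVLink-class, 900 GB/s per direction, 0.5 us per step
#   Scale-Out: one 800G NIC, 100 GB/s per direction, 5 us per step
scale_up_s = ring_allreduce_seconds(8, MESSAGE, 900e9, 0.5e-6)
scale_out_s = ring_allreduce_seconds(8, MESSAGE, 100e9, 5e-6)

print(f"Scale-Up : {scale_up_s * 1e6:.1f} us")
print(f"Scale-Out: {scale_out_s * 1e6:.1f} us")
```

Under these assumptions the script prints about 39.6 us for Scale-Up and 363.6 us for Scale-Out. Because tensor-parallel all-reduces recur in every layer, a gap of this size is paid many times per training step, whereas data-parallel gradient exchanges happen once per step and can overlap with compute.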

Technical Differences Between Scale-Up and Scale-Out

Latency Composition: Static vs Dynamic

Static Latency

- Defined by SerDes, FEC, and chip design

Dynamic Latency

- Caused by congestion, queue buildup, and flow control

Scale-Up

- Bypasses traditional L2/L3 network stacks

- Uses credit-based flow control and link-level retransmission

- Avoids DSP/CDR processing found in optical modules

- Typical latency: < 100 ns

Scale-Out

- Requires congestion and flow control (ECN, PFC, DCQCN, UEC)

- Multi-hop routing increases jitter and tail latency

- Typical latency: hundreds of ns per switch hop; microseconds to milliseconds end to end under load
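One rough way to see the static/dynamic split is to sum a fixed per-hop cost (SerDes, FEC, switch pipeline) and a queueing term over every hop on the path. The sketch below does exactly that; the hop counts, per-hop latencies, and queue depths are illustrative assumptions, not vendor figures.

```python
def path_latency_ns(hops: int, static_per_hop_ns: float,
                    queued_bytes_per_hop: float, link_gbit_s: float) -> float:
    """End-to-end latency = static part + dynamic (queueing) part, over all hops.

    static_per_hop_ns: fixed cost of SerDes, FEC and switch pipeline per hop.
    queued_bytes_per_hop: bytes already waiting ahead of our packet at each hop.
    A link of R Gb/s drains R bits per nanosecond.
    """
    queueing_ns = queued_bytes_per_hop * 8 / link_gbit_s
    return hops * (static_per_hop_ns + queueing_ns)


# Assumed parameters, for illustration only.
# Scale-Up: one NVSwitch hop; credit-based flow control keeps queues near zero.
scale_up = path_latency_ns(hops=1, static_per_hop_ns=90,
                           queued_bytes_per_hop=0, link_gbit_s=7200)
# Scale-Out: leaf-spine-leaf (3 switch hops) on 800G, idle vs. 64 KiB queued per hop.
scale_out_idle = path_latency_ns(3, 600, 0, 800)
scale_out_busy = path_latency_ns(3, 600, 64 * 1024, 800)

print(scale_up, scale_out_idle, scale_out_busy)
```

With these numbers, queueing roughly doubles the Scale-Out path latency (about 1.8 us idle vs. 3.8 us congested), while the Scale-Up path stays at its static cost. That dynamic term is what congestion control tries to keep small.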

Interconnect Media: Copper vs Optics

| Item | Scale-Up | Scale-Out |
|---|---|---|
| Medium | Copper cables | Optical modules |
| Bandwidth | TB/s-level | 100–800 Gb/s, up to several Tb/s |
| Latency | Extremely low | Higher (due to DSP, routing, etc.) |
| Power | Very low | Higher (optical DSP power) |

Rule of thumb at scale: Scale-Up links stay on short-reach copper, while Scale-Out links rely on optics.

Case Study: DGX A100 vs DGX H100 (256-GPU Pod)

- DGX A100 relies on InfiniBand → Scale-Out cluster

- DGX H100 adds a second tier of NVSwitch (the external NVLink Switch System) → Scale-Up NVLink domain spanning all 256 GPUs

Both provide 256 GPUs, but communication characteristics differ drastically.

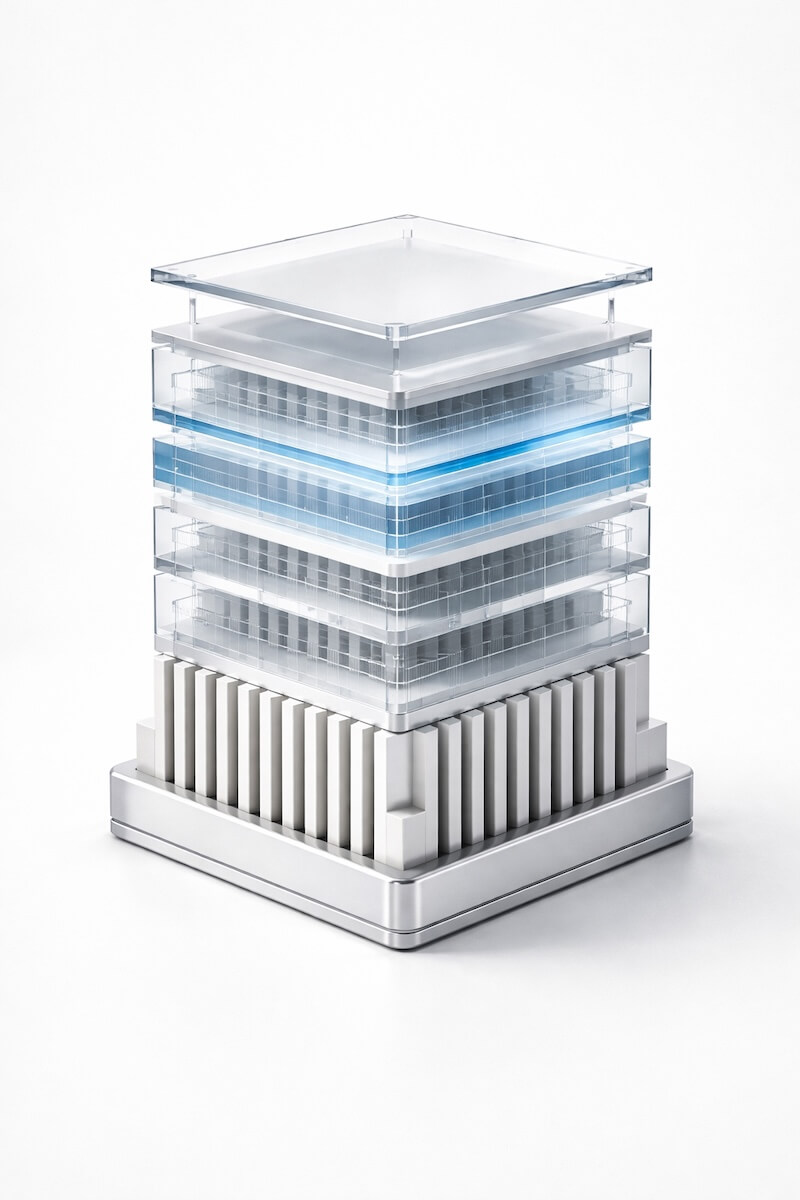

Scale-Up at Its Peak: NVIDIA NVL72 SuperNode

NVIDIA NVL72 integrates 36 Grace CPUs and 72 Blackwell GPUs into a liquid-cooled cabinet, forming the first true cabinet-scale SuperNode.

Scale-Up Interconnect Inside NVL72

NVLink 5 + NVSwitch Characteristics:

- Per GPU: 1.8 TB/s bidirectional bandwidth

- Total (72 GPUs): 129.6 TB/s

- 5,184 differential-pair copper connections

- Non-blocking all-to-all connectivity across the cabinet through NVSwitch
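The three NVLink figures above are consistent with each other, and a few lines of arithmetic show how. The sketch assumes NVLink 5 parameters that the text does not state: 18 links per GPU, 100 GB/s per link (both directions combined), and two 200 Gb/s SerDes lanes per link in each direction.

```python
GPUS = 72
LINKS_PER_GPU = 18              # assumed NVLink 5 links per Blackwell GPU
LINK_GBYTE_S_BIDIR = 100        # assumed GB/s per link, both directions combined
LANES_PER_DIRECTION = 2         # assumed 200 Gb/s SerDes lanes per link, per direction

per_gpu_tbyte_s = LINKS_PER_GPU * LINK_GBYTE_S_BIDIR / 1000   # TB/s per GPU
total_tbyte_s = GPUS * per_gpu_tbyte_s                         # TB/s for the cabinet

# Each lane in each direction is one differential pair.
diff_pairs = GPUS * LINKS_PER_GPU * LANES_PER_DIRECTION * 2

# Consistency check: 2 lanes x 200 Gb/s = 400 Gb/s = 50 GB/s per direction per link.
per_direction_gbyte_s = LANES_PER_DIRECTION * 200 / 8
assert per_direction_gbyte_s * 2 == LINK_GBYTE_S_BIDIR

print(f"{per_gpu_tbyte_s:.1f} TB/s per GPU, {total_tbyte_s:.1f} TB/s total, {diff_pairs:,} pairs")
```

It prints 1.8 TB/s per GPU, 129.6 TB/s total, and 5,184 pairs, matching the list.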

Performance Highlights:

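- 18× the bandwidth of a single 800G RDMA port (1.8 TB/s bidirectional vs. 100 GB/s in one direction; about 9× when both are compared per direction)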

- Eliminates ~100 ns of optical DSP/CDR latency

- Large unified memory system:

  - 13.5 TB HBM

  - 17 TB CPU LPDDR5X via NVLink-C2C

Although it is built from multiple compute trays, NVL72 behaves as a single cabinet-level unified compute node.
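The headline 18× figure mixes a bidirectional number with a one-way one, so it helps to separate the two comparisons. The sketch below does that and also totals the memory figures quoted above; the variable names are illustrative, and the only inputs are the numbers already given in this section.

```python
NVLINK_GBYTE_S_BIDIR = 1800   # per GPU, both directions combined (1.8 TB/s)
NIC_GBIT_S = 800              # one 800G RDMA port, each direction

nvlink_per_dir = NVLINK_GBYTE_S_BIDIR / 2   # 900 GB/s each way
nic_per_dir = NIC_GBIT_S / 8                # 100 GB/s each way

# Bidirectional NVLink vs. one direction of the NIC (the headline comparison).
headline_ratio = NVLINK_GBYTE_S_BIDIR / nic_per_dir
# Like-for-like: one direction vs. one direction.
per_direction_ratio = nvlink_per_dir / nic_per_dir

HBM_TB = 13.5
LPDDR5X_TB = 17.0
fast_memory_tb = HBM_TB + LPDDR5X_TB   # combined HBM + LPDDR5X in one cabinet

print(f"{headline_ratio:.0f}x headline, {per_direction_ratio:.0f}x per direction, "
      f"{fast_memory_tb} TB memory")
```

The output is 18x headline, 9x per direction, and 30.5 TB of combined HBM and LPDDR5X.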

Scale-Out Expansion Beyond the Cabinet

A SuperPOD of 8× NVL72 units (576 GPUs) is built by:

- Equipping each GPU tray with four 800G ConnectX-8 (CX8) RDMA NICs, one per GPU

- Connecting cabinets via InfiniBand or RoCEv2

Two-Tier Network Architecture:

- Scale-Up inside each cabinet

- Scale-Out across cabinets within the data center
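A quick tally shows how much thinner the Scale-Out tier is than the Scale-Up tier in this 576-GPU SuperPOD. The sketch assumes 18 compute trays of 4 GPUs per NVL72 (which is what makes four NICs per tray come out to one NIC per GPU) and compares both tiers per direction.

```python
CABINETS = 8
GPUS_PER_CABINET = 72
TRAYS_PER_CABINET = 18              # assumed: 18 compute trays x 4 GPUs = 72 GPUs
NICS_PER_TRAY = 4                   # four 800G NICs per GPU tray
NIC_GBIT_S = 800
NVLINK_TBYTE_S_PER_CABINET = 129.6  # bidirectional, from the NVL72 figures

gpus = CABINETS * GPUS_PER_CABINET
nics = CABINETS * TRAYS_PER_CABINET * NICS_PER_TRAY
assert nics == gpus  # one Scale-Out NIC per GPU

# Compare both tiers per direction, in TB/s.
scale_out_tbyte_s = nics * NIC_GBIT_S / 8 / 1000
scale_up_tbyte_s = CABINETS * NVLINK_TBYTE_S_PER_CABINET / 2

print(gpus, nics, scale_out_tbyte_s, round(scale_up_tbyte_s, 1))
```

It prints 576 GPUs, 576 NICs, 57.6 TB/s of Scale-Out injection bandwidth, and 518.4 TB/s of aggregate Scale-Up bandwidth, about a 9:1 ratio. This is why the lighter DP/PP traffic is the traffic that should cross cabinets.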

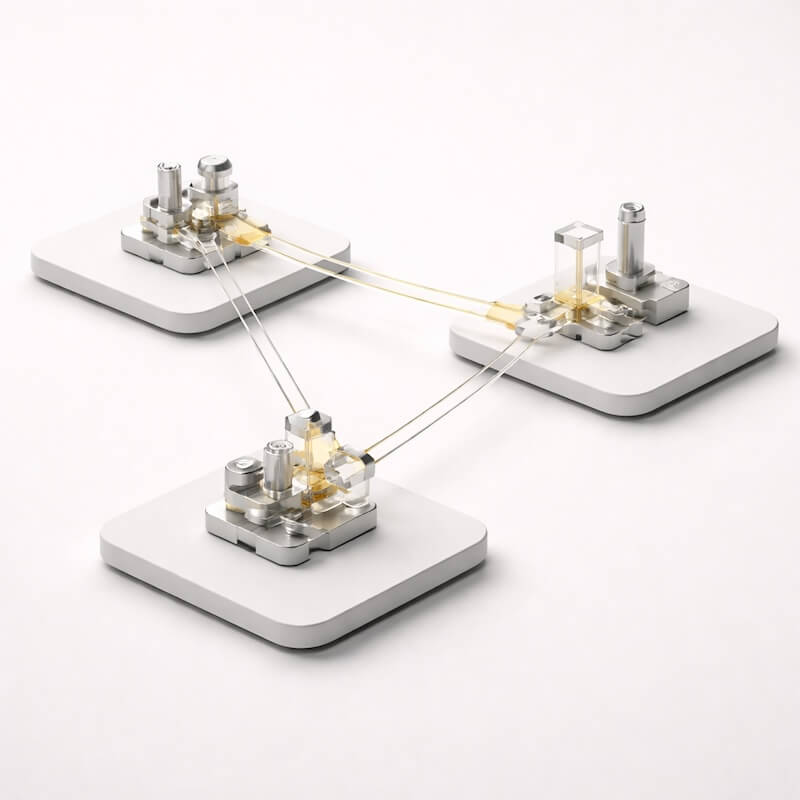

Scale-Across: Multi-Data-Center AI

Scale-Across extends AI networking beyond a single physical site.

Why Scale-Across Is Necessary

Single data centers face hard limits:

- Power availability

- Cooling capacity

- Physical land constraints

Example:

- One NVL72 consumes ~120 kW

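- A 1-GW AI factory therefore needs ~8,300 such cabinets (1 GW ÷ 120 kW, counting IT load only)

Concentrating that much power delivery, cooling, and floor space in a single facility is impractical.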
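The ~8,300 figure is a straight division of site power by cabinet power. The sketch below reproduces it and adds a hypothetical PUE (power usage effectiveness = total facility power / IT power) parameter to show how cooling and power-conversion overhead lowers the count; the PUE value of 1.2 is an assumption for illustration.

```python
import math


def cabinets_for_power(site_watts: float, cabinet_watts: float, pue: float = 1.0) -> int:
    """Whole cabinets a site power budget can feed.

    pue = total facility power / IT power; pue=1.0 counts IT load only,
    as the ~8,300 estimate does.
    """
    it_watts = site_watts / pue
    return math.floor(it_watts / cabinet_watts)


GW = 1e9
NVL72_WATTS = 120e3

it_only = cabinets_for_power(GW, NVL72_WATTS)
with_overhead = cabinets_for_power(GW, NVL72_WATTS, pue=1.2)   # hypothetical PUE

print(it_only, it_only * 72, with_overhead)
```

Counting IT load only gives 8,333 cabinets (599,976 GPUs); with the assumed PUE of 1.2, the same gigawatt feeds 6,944 cabinets.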

NVIDIA Spectrum-XGS

Key Components:

- ConnectX-8 and BlueField-3 SuperNICs

- 800G / 1.6T optical modules

- ZR / ZR+ coherent optics (80+ km DCI)

- Hollow-Core Fiber (HCF)

HCF Benefits:

- ~30% lower latency

- ~50% lower attenuation
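The latency benefit of HCF follows from propagation speed: delay per kilometre is proportional to the fiber's group index. The sketch assumes a typical group index of about 1.468 for standard single-mode fiber and about 1.003 for hollow-core fiber. Both values are illustrative assumptions, not figures from the text.

```python
C_KM_PER_S = 299_792.458   # speed of light in vacuum, km/s


def one_way_delay_us(distance_km: float, group_index: float) -> float:
    """Propagation delay = distance * n_g / c, in microseconds."""
    return distance_km * group_index / C_KM_PER_S * 1e6


SMF_GROUP_INDEX = 1.468   # assumed typical value for standard single-mode fiber
HCF_GROUP_INDEX = 1.003   # assumed: light guided almost entirely in air

distance_km = 80          # e.g. a ZR-class DCI span
smf = one_way_delay_us(distance_km, SMF_GROUP_INDEX)
hcf = one_way_delay_us(distance_km, HCF_GROUP_INDEX)

print(f"SMF {smf:.0f} us, HCF {hcf:.0f} us, {1 - hcf / smf:.0%} lower")
```

Under these assumptions HCF cuts propagation delay by about 32%, which matches the ~30% figure and saves roughly 250 us per round trip over 80 km.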

Scale-Across introduces a new inter-DC communication layer on top of traditional L3 architectures.

Enabling Optical Technologies

| Technology | Role |
|---|---|
| Hollow-Core Fiber | Ultra-low latency transport (light travels in air) |
| Coherent Transceivers | High-speed long-haul inter-DC communication |
| Optical Circuit Switches (OCS) | Massive bandwidth + port density, ideal for Spine/DCI tiers |

Some industry projections expect OCS to capture more than 50% of the traditional switch market over time.
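What distinguishes an OCS from a packet switch is that it only sets up physical light paths. It never reads headers or buffers packets, so each input is patched to one output until a controller reconfigures it. The toy model below captures just that mapping behavior. The class and method names are hypothetical, and it ignores reconfiguration time, insertion loss, and bidirectional ports.

```python
from typing import Dict, Optional


class OpticalCircuitSwitch:
    """Toy optical circuit switch: a reconfigurable one-to-one port mapping.

    Light entering an input port leaves on whichever output it is currently
    wired to; nothing is parsed or buffered. A controller swaps the whole
    mapping when the traffic pattern changes.
    """

    def __init__(self, num_ports: int) -> None:
        self.num_ports = num_ports
        self.circuits: Dict[int, int] = {}

    def configure(self, mapping: Dict[int, int]) -> None:
        """Install a new set of circuits (a partial permutation of ports)."""
        for src, dst in mapping.items():
            if not (0 <= src < self.num_ports and 0 <= dst < self.num_ports):
                raise ValueError(f"port out of range: {src}->{dst}")
        if len(set(mapping.values())) != len(mapping):
            raise ValueError("an output port can terminate only one circuit")
        self.circuits = dict(mapping)

    def output_for(self, in_port: int) -> Optional[int]:
        """Where light on in_port currently goes (None if unconnected)."""
        return self.circuits.get(in_port)


# Hypothetical usage: rewire a 4-port OCS between two pairings.
ocs = OpticalCircuitSwitch(4)
ocs.configure({0: 2, 1: 3})
print(ocs.output_for(0), ocs.output_for(2))
ocs.configure({0: 3, 1: 2})
print(ocs.output_for(0))
```

Because the switch is transparent to data rate and protocol, the same circuit can carry faster optics after an upgrade. This is part of why OCS suits the Spine/DCI tiers.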

Systematic Comparison

| Dimension | Scale-Up | Scale-Out | Scale-Across |
|---|---|---|---|
| Physical Scope | Server / chassis / cabinet | Within one data center | Across multiple data centers |
| Communication Semantics | Load–store (memory semantic) | Message passing | Cross-region unified fabric |
| Latency | ns | μs–ms | ms (optimized) |
| Bandwidth | TB/s | 100–800 Gb/s | Depends on optical modules |
| Medium | Copper | Optical | Coherent optics / HCF |
| Key Technologies | NVLink, UALink, SUE | InfiniBand, RoCE, UEC | Spectrum-XGS, OCS |
| Bottlenecks | PCB area, cooling, power | Congestion control, topology | DCI cost, optical power |
| Main Use Cases | TP/EP | DP/PP | Giant-scale training, AI factories |
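The "Main Use Cases" row suggests a simple placement rule for a training job. Keep TP/EP groups inside one Scale-Up domain, run PP and DP over Scale-Out, and spill DP replicas onto Scale-Across links only when one site cannot hold the whole job. The sketch below encodes that policy. The function name, the 72-GPU domain default (one NVL72), and the 100,000-GPU-per-site limit are hypothetical assumptions for illustration.

```python
from typing import Dict


def map_parallelism_to_tiers(tp: int, pp: int, dp: int,
                             scale_up_domain: int = 72,
                             gpus_per_site: int = 100_000) -> Dict[str, str]:
    """Assign each parallelism dimension to a network tier (hypothetical policy).

    - TP (and EP) groups must fit inside one Scale-Up (NVLink) domain.
    - One model replica (TP x PP) must fit inside one site; PP stages use Scale-Out.
    - DP replicas stay on Scale-Out if the whole job fits in one site,
      otherwise they are spread across sites over Scale-Across links.
    """
    if tp > scale_up_domain:
        raise ValueError("TP group exceeds the Scale-Up domain")
    if tp * pp > gpus_per_site:
        raise ValueError("one model replica exceeds a single site")
    total_gpus = tp * pp * dp
    data_tier = "Scale-Out" if total_gpus <= gpus_per_site else "Scale-Across"
    return {"tensor": "Scale-Up", "pipeline": "Scale-Out", "data": data_tier}


print(map_parallelism_to_tiers(tp=8, pp=8, dp=512))    # 32,768 GPUs: fits in one site
print(map_parallelism_to_tiers(tp=8, pp=16, dp=2048))  # 262,144 GPUs: spans sites
```

Putting DP on the widest tier follows the table's logic. DP exchanges bulk gradients once per step, so it tolerates the millisecond-class latency of inter-DC links far better than TP/EP.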

Final Thoughts

The three dimensions will define the architecture of next-generation AI factories:

- Scale-Up to build cabinet-level SuperNodes

- Scale-Out to assemble mega-clusters inside a data center

- Scale-Across to unify multiple data centers into GW-scale compute fabrics

Future AI facilities will:

- Host millions of GPUs

- Consume multiple gigawatts of power

- Span multiple geographic regions

- Support AGI, robotics, autonomous systems, medical AI, and nation-scale LLMs

Scale-Across is the foundational step toward this future.